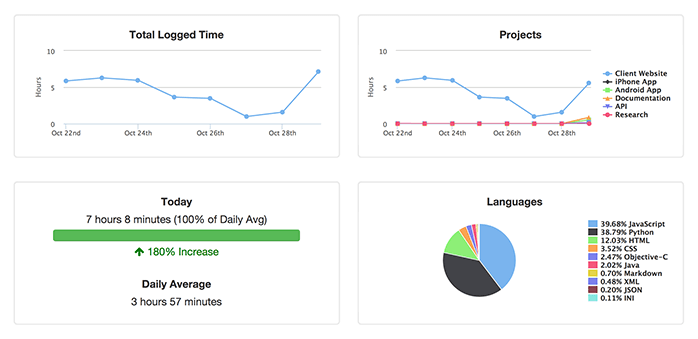

WakaTime’s infra is split across DigitalOcean and AWS. We use DigitalOcean Droplets for compute, AWS S3 to store code stats, and DigitalOcean Spaces for backups. You can find more info on this split infra decision in this blog post. The files we store in S3 are usually between 10 KB and 50 KB in size, and we store multiple terabytes of them. We don’t use the Spaces CDN, and our Spaces are located in the same region as our Droplets.

Over the years, we’ve noticed latency differences between Spaces and S3, which is why WakaTime uses Spaces only for backups and S3 as our main database. Using S3 as a database did increase our Outbound Data Transfer costs, but we reduced those costs by caching S3 reads in SSDB, a disk-backed, Redis-compatible database.
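A read-through cache in front of S3 can be sketched in a few lines. This is a minimal illustration under stated assumptions, not WakaTime’s code: a plain dict stands in for SSDB (SSDB speaks the Redis protocol, so a real version would likely use a Redis client), and `fetch_from_s3` is a hypothetical callable. Only cache misses reach S3, which is where the outbound transfer savings come from.

```python
from typing import Callable, Dict


class ReadThroughCache:
    """Sketch of a read-through cache; the dict is a stand-in for SSDB."""

    def __init__(self, fetch_from_s3: Callable[[str], bytes]) -> None:
        self.cache: Dict[str, bytes] = {}    # hypothetical stand-in for SSDB
        self.fetch_from_s3 = fetch_from_s3   # hypothetical S3 GET function
        self.s3_reads = 0                    # reads that actually left the cache

    def get(self, key: str) -> bytes:
        cached = self.cache.get(key)
        if cached is not None:
            return cached  # hit: no S3 request, no outbound data transfer
        self.s3_reads += 1
        data = self.fetch_from_s3(key)
        self.cache[key] = data  # populate so the next read is served locally
        return data

    def warm(self, key: str, data: bytes) -> None:
        # Write-time warming: store a freshly written object before it is read.
        self.cache[key] = data
```

Repeated reads of the same key cost one S3 request; warming on write means even the first read can skip S3.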

Latency of S3 vs Spaces

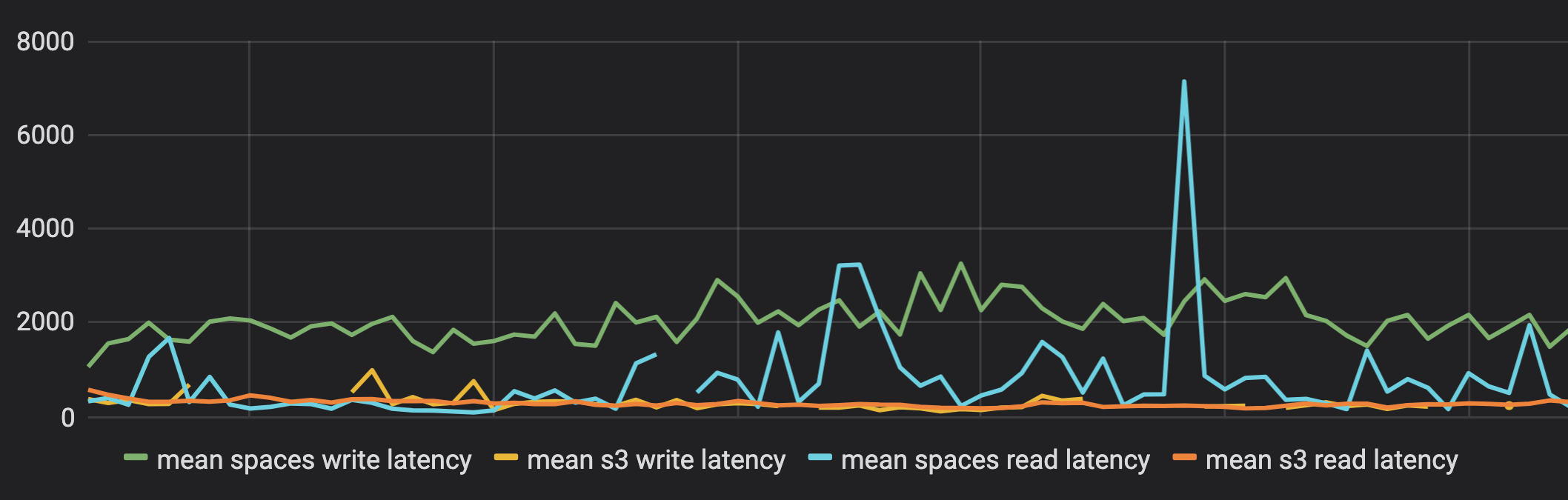

The first thing we noticed was that writing to DigitalOcean Spaces takes much longer than writing the same objects to S3. Average write latency for S3 stays around 200ms when writing from DigitalOcean servers. When writing the same files from the same servers to DigitalOcean Spaces, average write latency is around 2 seconds!😱 This wasn’t that bad for our use case since we parallelize writes, but it means writing terabytes of data to Spaces can take days.
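Why "days" even with parallel writes? With C requests in flight and L seconds per request, throughput is roughly C / L objects per second (Little’s law). The sketch below applies that to an assumed workload: 1 TB of ~30 KB objects written with 100 concurrent uploads. The object size and concurrency are illustrative assumptions, not WakaTime’s actual settings; only the 200ms and 2s latencies come from our measurements.

```python
SECONDS_PER_DAY = 86_400


def bulk_write_days(total_bytes: float, avg_object_bytes: float,
                    latency_s: float, concurrency: int) -> float:
    """Estimate wall-clock days to upload total_bytes as many small objects."""
    objects = total_bytes / avg_object_bytes
    throughput = concurrency / latency_s  # objects per second (Little's law)
    return objects / throughput / SECONDS_PER_DAY


# Assumed workload: 1 TB of ~30 KB objects, 100 uploads in flight.
TB = 10**12
spaces_days = bulk_write_days(TB, 30_000, latency_s=2.0, concurrency=100)
s3_days = bulk_write_days(TB, 30_000, latency_s=0.2, concurrency=100)
print(f"Spaces: {spaces_days:.1f} days, S3: {s3_days:.1f} days")
# prints "Spaces: 7.7 days, S3: 0.8 days"
```

At 2 seconds per write, 100 uploads in flight only yield about 50 objects per second, well under the Spaces rate limit, so raising concurrency is the main lever, up to the point where the rate limit kicks in.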

Read latency was more important for us, and we consistently see faster (lower-latency) reads from S3 than from Spaces. Reading from S3 takes around 200ms per object, while reading from Spaces takes around 300ms. We also noticed a higher rate of failures when reading from Spaces than from S3. Spaces also rate limits requests much more aggressively: S3 allows 5,500+ read requests per second per prefix, more than ten times the 500 requests per second DigitalOcean Spaces allows.

We start each file path prefix with a random string generated per user, to prevent one user’s reads from bottlenecking reads of other users. According to AWS docs, this means we can read up to 5.5k files per second per user from S3. With Spaces, we can only read 500 files per second total across all users.
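The per-user random prefix idea and the throughput arithmetic above can be sketched as follows. The key layout (`<prefix>/<user_id>/<day>.json`), the 8-hex-character prefix, and the in-memory `_user_prefixes` map are assumptions for illustration; a real system would persist each user’s prefix. The S3 figure is AWS’s documented 5,500 GET/HEAD requests per second per prefix, and S3 scales partitions up gradually, so treat the result as an upper bound.

```python
import secrets
from typing import Dict

S3_GET_LIMIT_PER_PREFIX = 5_500  # AWS: GET/HEAD requests per second per prefix
SPACES_LIMIT_TOTAL = 500         # Spaces figure quoted in this post

# Hypothetical in-memory map; a real system would store this with the user.
_user_prefixes: Dict[str, str] = {}


def user_prefix(user_id: str) -> str:
    # Generate once per user and reuse it, so all of a user's files share a prefix.
    if user_id not in _user_prefixes:
        _user_prefixes[user_id] = secrets.token_hex(4)  # 8 random hex chars
    return _user_prefixes[user_id]


def object_key(user_id: str, day: str) -> str:
    return f"{user_prefix(user_id)}/{user_id}/{day}.json"


def max_reads_per_second(active_users: int) -> Dict[str, int]:
    # S3 limits apply per prefix, so the ceiling grows with the number of users;
    # the Spaces limit is a single total shared by everyone.
    return {
        "s3": S3_GET_LIMIT_PER_PREFIX * active_users,
        "spaces": SPACES_LIMIT_TOTAL,
    }
```

Because the random part comes first, different users’ keys spread across different prefixes, so one heavy user cannot exhaust another user’s read budget.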

WakaTime’s Infra

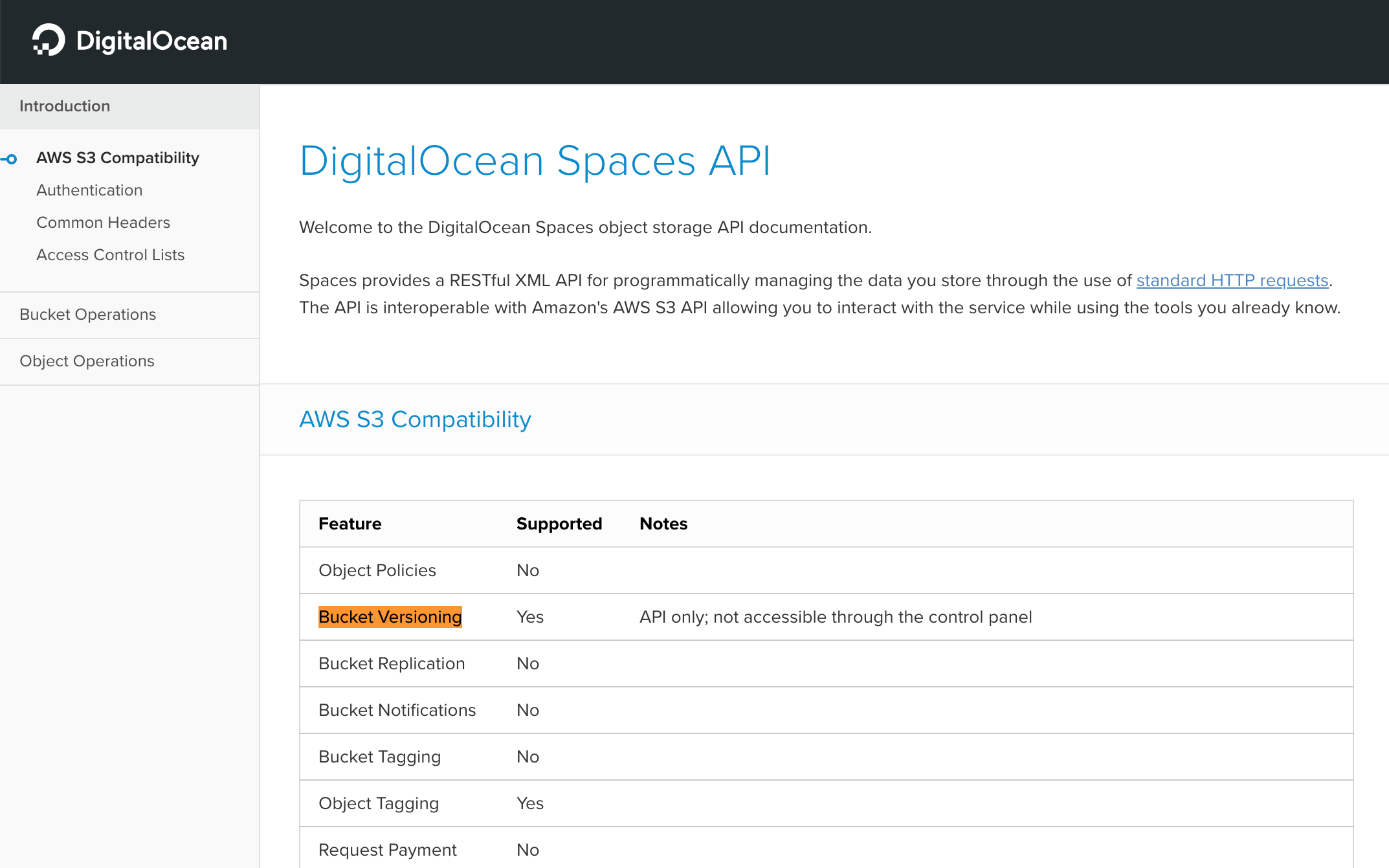

We’re now using S3 as our primary code stats database, with an SSDB caching layer, and multiple Postgres databases on DigitalOcean block storage volumes. Code stats are sent from WakaTime plugins to the WakaTime API and temporarily stored in Postgres, sharded by day. A background task runs on our RabbitMQ task queue to:

- move code stats from Postgres into S3

- warm the SSDB cache

- globally lock and drop the current shard from Postgres, after all its rows are in S3
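The three steps above can be sketched with in-memory stand-ins. Everything here is hypothetical: dicts play the roles of the day-sharded Postgres tables, S3, and SSDB, keys are simplified to `<user_id>/<day>` (without the random prefix), and a process-local `threading.Lock` stands in for the global lock a multi-server deployment would need. Objects are merged by row id so re-running the task, or archiving late rows for an already-archived day, never loses data.

```python
import threading
from collections import defaultdict
from typing import Dict, List, Tuple

Row = Tuple[str, str, dict]                 # (row_id, user_id, payload)
postgres_shards: Dict[str, List[Row]] = {}  # stand-in: day -> rows in that shard
s3: Dict[str, Dict[str, dict]] = {}         # stand-in: object key -> {row_id: payload}
ssdb_cache: Dict[str, Dict[str, dict]] = {} # stand-in for the SSDB cache
shard_lock = threading.Lock()               # production would need a global lock


def insert_row(day: str, row_id: str, user_id: str, payload: dict) -> None:
    with shard_lock:
        postgres_shards.setdefault(day, []).append((row_id, user_id, payload))


def archive_shard(day: str) -> bool:
    """Move a day shard into S3, warm the cache, and drop the shard if nothing new arrived."""
    with shard_lock:
        snapshot = list(postgres_shards.get(day, []))  # copy: later inserts won't change it

    # 1. Group rows into one object per user per day and merge into S3 by row id.
    grouped: Dict[str, Dict[str, dict]] = defaultdict(dict)
    for row_id, user_id, payload in snapshot:
        grouped[f"{user_id}/{day}"][row_id] = payload
    for key, rows in grouped.items():
        merged = {**s3.get(key, {}), **rows}
        s3[key] = merged
        ssdb_cache[key] = merged  # 2. warm the cache with the updated object

    # 3. Under the lock, drop the shard only if every row in it was uploaded.
    with shard_lock:
        if len(postgres_shards.get(day, [])) != len(snapshot):
            return False  # rows arrived mid-upload; the next run will retry
        postgres_shards.pop(day, None)
    return True
```

Taking the lock only around the snapshot and the drop keeps slow S3 uploads from blocking new inserts, at the cost of occasionally retrying a shard.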

A similar task also runs each day to back up the code stats into DigitalOcean Spaces. Our backups in Spaces are automatically versioned by DigitalOcean: if new code stats come in for an existing S3 file, the change is replicated to the Spaces backup as a new version. DigitalOcean Spaces is very affordably priced, and latency matters much less for infrequent reads, making it a great place to store backups.
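A daily backup into a versioned bucket can be sketched like this. `VersionedBucket` is a hypothetical in-memory stand-in that keeps every version of an object, and `primary` is a plain dict standing in for S3. The sketch compares object bytes directly; a real job would more likely compare metadata such as ETags or modification times to avoid downloading both copies.

```python
from typing import Dict, List


class VersionedBucket:
    """Stand-in for a versioning-enabled bucket: every put keeps older versions."""

    def __init__(self) -> None:
        self.versions: Dict[str, List[bytes]] = {}

    def put(self, key: str, data: bytes) -> None:
        self.versions.setdefault(key, []).append(data)

    def latest(self, key: str) -> bytes:
        return self.versions[key][-1]


def daily_backup(primary: Dict[str, bytes], backup: VersionedBucket) -> int:
    """Copy objects that are new or changed since the last run; return how many were copied."""
    copied = 0
    for key, data in primary.items():
        existing = backup.versions.get(key)
        if existing and existing[-1] == data:
            continue  # unchanged since the last backup
        backup.put(key, data)  # a changed object becomes a new version
        copied += 1
    return copied
```

Skipping unchanged objects keeps each daily run proportional to the day’s changes, while the bucket’s version history preserves earlier copies of any file that was updated.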

If you liked this post, you can browse similar articles using the devops tag. Get started with your free programming metrics today by installing the open source WakaTime plugin.

Alan Hamlett